Skywatch: Agricultural Monitoring Drone

Role: Founder & Systems Engineer

What is Skywatch?

Skywatch is an agricultural monitoring system I developed that uses drones equipped with computer vision to help farmers detect pests and weeds and monitor livestock. The project combines hardware engineering with machine learning to create a practical solution for modern farming challenges.

The system runs a YOLOv8 neural network on a Raspberry Pi 4 to analyze images from the drone's camera in real time (~12 FPS). When pests or livestock are detected, their GPS coordinates are automatically sent to a web dashboard, where farmers can track and respond to issues quickly.
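The description does not say how a detection's pixel position becomes a GPS coordinate. One common approach is sketched below under stated assumptions: a downward-facing camera, flat ground, a known height above ground and horizontal field of view, square pixels, and the top of the image aligned with the drone's heading. It projects the bounding-box center onto the ground and offsets the drone's own GPS fix. `DroneState` and `pixel_to_gps` are hypothetical names, not Skywatch's actual code.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


@dataclass
class DroneState:
    lat_deg: float      # drone latitude from GPS
    lon_deg: float      # drone longitude from GPS
    altitude_m: float   # height above ground (assumed known)
    heading_deg: float  # clockwise from true north


def pixel_to_gps(px, py, img_w, img_h, hfov_deg, state):
    """Estimate the lat/lon of an image pixel, assuming a nadir camera over flat ground."""
    # Ground width covered by the image, and the resulting meters per pixel
    footprint_w = 2.0 * state.altitude_m * math.tan(math.radians(hfov_deg) / 2.0)
    m_per_px = footprint_w / img_w  # assumes square pixels

    # Offset from the image center: +right, +forward (top of image = heading)
    right_m = (px - img_w / 2.0) * m_per_px
    fwd_m = (img_h / 2.0 - py) * m_per_px

    # Rotate the body-frame offset into east/north using the heading
    h = math.radians(state.heading_deg)
    east_m = right_m * math.cos(h) + fwd_m * math.sin(h)
    north_m = -right_m * math.sin(h) + fwd_m * math.cos(h)

    # Small-offset (flat-Earth) conversion from meters to degrees
    dlat = math.degrees(north_m / EARTH_RADIUS_M)
    dlon = math.degrees(east_m / (EARTH_RADIUS_M * math.cos(math.radians(state.lat_deg))))
    return state.lat_deg + dlat, state.lon_deg + dlon
```

The flat-Earth conversion is adequate for offsets of a few tens of meters; camera tilt or uneven terrain would need a fuller camera model.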

The Skywatch agricultural monitoring drone

Research Background

I conducted farmer surveys to understand the main challenges in agriculture. Four key problems emerged:

- Ineffective weed control methods - Current manual detection is slow and often inaccurate

- Pest damage to crops - By the time pests are spotted, significant damage has occurred

- Declining productivity and rising costs - Labor-intensive monitoring is expensive

- High management and monitoring expenses - Traditional surveillance methods don't scale

These issues led me to develop Skywatch as an automated solution that could monitor large areas efficiently and detect problems early.

Technical Implementation

Hardware Components

The drone is built with several key components working together:

- Mamba F45 Flight Controller - Provides flight stability and GPS integration

- ESC Mamba F55 Pro - Controls motor speeds for stable flight and maneuvering

- Brushless Motors - Deliver sufficient thrust across different speed ranges

- HD Camera - Captures high-quality images for computer vision analysis

- GPS Sensors - Enable precise positioning for accurate field mapping

- Raspberry Pi 4 - Acts as the onboard computer, running the detection model in real time
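The description does not say how the Raspberry Pi receives position data. Many GPS modules stream NMEA 0183 text sentences over a serial port, so, as an illustration that assumes this interface (rather than position data relayed through the flight controller), here is a minimal parser for the GGA sentence, which carries latitude, longitude, fix quality, satellite count, HDOP, and altitude. `nmea_checksum` and `parse_gga` are hypothetical helper names.

```python
from functools import reduce


def nmea_checksum(body):
    """XOR of every character between '$' and '*'."""
    return reduce(lambda acc, ch: acc ^ ord(ch), body, 0)


def _to_degrees(value, hemisphere):
    """Convert NMEA ddmm.mmmm / dddmm.mmmm to signed decimal degrees."""
    raw = float(value)
    degrees = int(raw // 100)
    minutes = raw - degrees * 100
    decimal = degrees + minutes / 60.0
    return -decimal if hemisphere in ("S", "W") else decimal


def parse_gga(sentence):
    """Parse a GGA sentence into a dict, or return None if invalid or without a fix."""
    sentence = sentence.strip()
    if not sentence.startswith("$") or "*" not in sentence:
        return None
    body, _, given = sentence[1:].partition("*")
    if nmea_checksum(body) != int(given, 16):
        return None  # corrupted sentence
    fields = body.split(",")
    if len(fields) < 10 or not fields[0].endswith("GGA") or fields[6] in ("", "0"):
        return None  # not a GGA sentence, or no position fix
    return {
        "lat": _to_degrees(fields[2], fields[3]),
        "lon": _to_degrees(fields[4], fields[5]),
        "fix_quality": int(fields[6]),
        "satellites": int(fields[7]),
        "hdop": float(fields[8]),
        "altitude_m": float(fields[9]),
    }
```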

Machine Learning and Computer Vision

The core intelligence of Skywatch comes from a YOLOv8 neural network trained specifically for agricultural detection tasks.

Why YOLOv8? I chose this architecture because it is a single-stage detector: it predicts boxes and classes in one pass over the image, which makes it much faster than two-stage detectors such as R-CNN. For a drone system that needs real-time performance, this speed advantage is crucial.

Training Process:

- Dataset: 2,103 images including livestock, crops, pests, and weeds

- Architecture: YOLOv8, trained on the agricultural object classes above

- Training parameters: 50 epochs, batch size 16, learning rate 0.001

- Performance: Achieved 87% F1-score in object detection

- Optimization: Converted to ONNX format for efficient inference on Raspberry Pi
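The training parameters above map naturally onto the Ultralytics Python package, the usual way to train and export YOLOv8. The sketch below assumes that package was used, a pretrained `yolov8n.pt` starting checkpoint, an AdamW optimizer, and a hypothetical dataset file `skywatch.yaml`; none of these are confirmed by the project description.

```python
def train_and_export(data_yaml: str = "skywatch.yaml") -> str:
    """Fine-tune YOLOv8 with the listed hyperparameters and export it to ONNX."""
    from ultralytics import YOLO  # imported lazily so this module loads without the package

    model = YOLO("yolov8n.pt")  # pretrained nano checkpoint (assumed model size)
    model.train(
        data=data_yaml,     # hypothetical dataset config listing images and class names
        epochs=50,
        batch=16,
        lr0=0.001,          # initial learning rate
        optimizer="AdamW",  # set explicitly: with optimizer="auto", Ultralytics ignores lr0
    )
    # export() writes the ONNX file and returns its path
    return model.export(format="onnx")
```

Setting the optimizer explicitly matters here because Ultralytics' default `optimizer="auto"` chooses its own learning rate and would silently override the 0.001 value.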

The model can identify multiple object types simultaneously and provides bounding box coordinates along with confidence scores for each detection.
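Reading those boxes and confidence scores out of the ONNX model takes a short post-processing step. A minimal NumPy sketch follows, assuming the Ultralytics YOLOv8 ONNX export layout of shape `(1, 4 + num_classes, N)` (box center x, center y, width, height, then one score per class) and illustrative thresholds of 0.25 and 0.45. Scaling boxes from the model's input resolution back to the original frame is omitted.

```python
import numpy as np


def iou(box, boxes):
    """IoU between one [x1, y1, x2, y2] box and an (N, 4) array of boxes."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter + 1e-9)


def decode_yolov8(output, conf_thres=0.25, iou_thres=0.45):
    """Turn a raw (1, 4 + num_classes, N) output into (box, score, class_id) tuples."""
    preds = output[0].T                      # (N, 4 + num_classes)
    class_scores = preds[:, 4:]
    class_ids = class_scores.argmax(axis=1)
    scores = class_scores.max(axis=1)
    keep = scores >= conf_thres              # drop low-confidence candidates
    preds, scores, class_ids = preds[keep], scores[keep], class_ids[keep]

    # Convert center format (cx, cy, w, h) to corner format (x1, y1, x2, y2)
    cx, cy, w, h = preds[:, 0], preds[:, 1], preds[:, 2], preds[:, 3]
    boxes = np.stack([cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2], axis=1)

    # Greedy per-class non-maximum suppression, highest score first
    order = scores.argsort()[::-1]
    results = []
    while order.size > 0:
        i = order[0]
        results.append((boxes[i], float(scores[i]), int(class_ids[i])))
        rest = order[1:]
        overlaps = iou(boxes[i], boxes[rest])
        # Suppress same-class boxes that overlap the kept box too much
        suppress = (overlaps > iou_thres) & (class_ids[rest] == class_ids[i])
        order = rest[~suppress]
    return results
```

With ONNX Runtime, `output` would be the first array returned by `InferenceSession.run`.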

Software Architecture

I developed the drone control software using multiple programming languages, each chosen for specific tasks:

- Python: Handles data processing, YOLOv8 model inference, web communication, and sensor data management

- C++: Manages real-time flight control operations, including PID controllers and Kalman filtering

- Bash/Shell: Simple automation scripts for startup/shutdown and command execution

Web Platform

The monitoring dashboard is built with:

- Next.js: Provides server-side rendering for fast page loads

- shadcn/ui: Provides components for a clean, intuitive interface for farmers

- Node.js: Handles backend API requests and drone communication
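On the drone side, each detection has to reach the Node.js backend. Below is a minimal sketch using only Python's standard library and assuming a JSON-over-HTTP interface; the endpoint URL, the field names, and the example label are hypothetical, since the actual API between the drone and the dashboard is not described.

```python
import json
import urllib.request

# Hypothetical endpoint exposed by the Node.js backend
DASHBOARD_URL = "http://localhost:3000/api/detections"


def build_detection_request(label, confidence, lat, lon, url=DASHBOARD_URL):
    """Package one detection as a JSON POST request for the dashboard backend."""
    payload = {
        "label": label,
        "confidence": round(confidence, 3),
        "lat": lat,
        "lon": lon,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_detection(req, timeout_s=5.0):
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(req, timeout=timeout_s) as resp:
        return resp.status
```

Separating request construction from sending keeps the payload format easy to check offline and lets the network call be retried independently.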

The web dashboard showing real-time environmental data including temperature, humidity, and other key metrics

Control Systems

PID Controller

The PID (Proportional-Integral-Derivative) controller keeps the drone stable by adjusting motor speeds based on the error between the desired and actual state (altitude, direction).
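The control law itself is short. The project's flight control code is written in C++; this Python sketch only illustrates the same discrete PID update, u = Kp·e + Ki·∫e dt + Kd·de/dt, with hypothetical gains and output limits and a simple anti-windup rule (the integration step is undone whenever the output saturates).

```python
class PID:
    """Discrete PID controller: u = Kp*e + Ki*integral(e) + Kd*de/dt, clamped to limits."""

    def __init__(self, kp, ki, kd, out_min=-1.0, out_max=1.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement, dt):
        error = setpoint - measurement
        self.integral += error * dt
        # No derivative on the first call, when there is no previous error
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Clamp to the actuator range; undo this step's integration if saturated
        if out > self.out_max or out < self.out_min:
            self.integral -= error * dt
            out = min(max(out, self.out_min), self.out_max)
        return out
```

In practice one such loop typically runs per controlled quantity (for example altitude, roll, pitch, and yaw), with gains tuned on the real airframe.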

Kalman Filter

Provides accurate state estimation by fusing IMU and GPS readings and filtering out sensor noise for precise navigation.
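As a much-simplified Python illustration of that fusion (the project's filter is in C++; this covers one axis only, with hypothetical noise values), the filter below uses IMU acceleration to predict position and velocity and corrects the prediction with each GPS position. A flight-grade estimator would normally track all three axes plus attitude.

```python
import numpy as np


class PositionKalman1D:
    """One-axis Kalman filter: IMU acceleration drives the prediction, GPS corrects it."""

    def __init__(self, accel_var=0.5, gps_var=2.0):
        self.x = np.zeros(2)             # state: [position (m), velocity (m/s)]
        self.P = np.eye(2) * 10.0        # initial state uncertainty
        self.accel_var = accel_var       # assumed IMU acceleration noise variance
        self.R = np.array([[gps_var]])   # assumed GPS position noise variance (m^2)
        self.H = np.array([[1.0, 0.0]])  # GPS measures position only

    def predict(self, accel, dt):
        F = np.array([[1.0, dt], [0.0, 1.0]])  # constant-velocity motion model
        B = np.array([0.5 * dt**2, dt])        # how acceleration enters the state
        self.x = F @ self.x + B * accel
        G = B.reshape(2, 1)
        Q = G @ G.T * self.accel_var           # process noise from noisy acceleration
        self.P = F @ self.P @ F.T + Q

    def update(self, gps_pos):
        y = np.array([gps_pos]) - self.H @ self.x  # innovation
        S = self.H @ self.P @ self.H.T + self.R    # innovation covariance
        K = self.P @ self.H.T @ np.linalg.inv(S)   # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(2) - K @ self.H) @ self.P
```

Each control cycle would call `predict` with the latest IMU reading, while `update` runs only when a new GPS fix arrives, since GPS typically updates far less often than the IMU.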

Key Features

The Skywatch system provides several monitoring and detection capabilities:

Real-time Pest Detection: Automatically identifies and classifies agricultural pests as they appear in the field

Weed Monitoring: Detects unwanted vegetation with precise location mapping

Livestock Tracking: Monitors animal locations using GPS coordinates for farm management

Crop Health Assessment: Analyzes field conditions to assess overall crop health

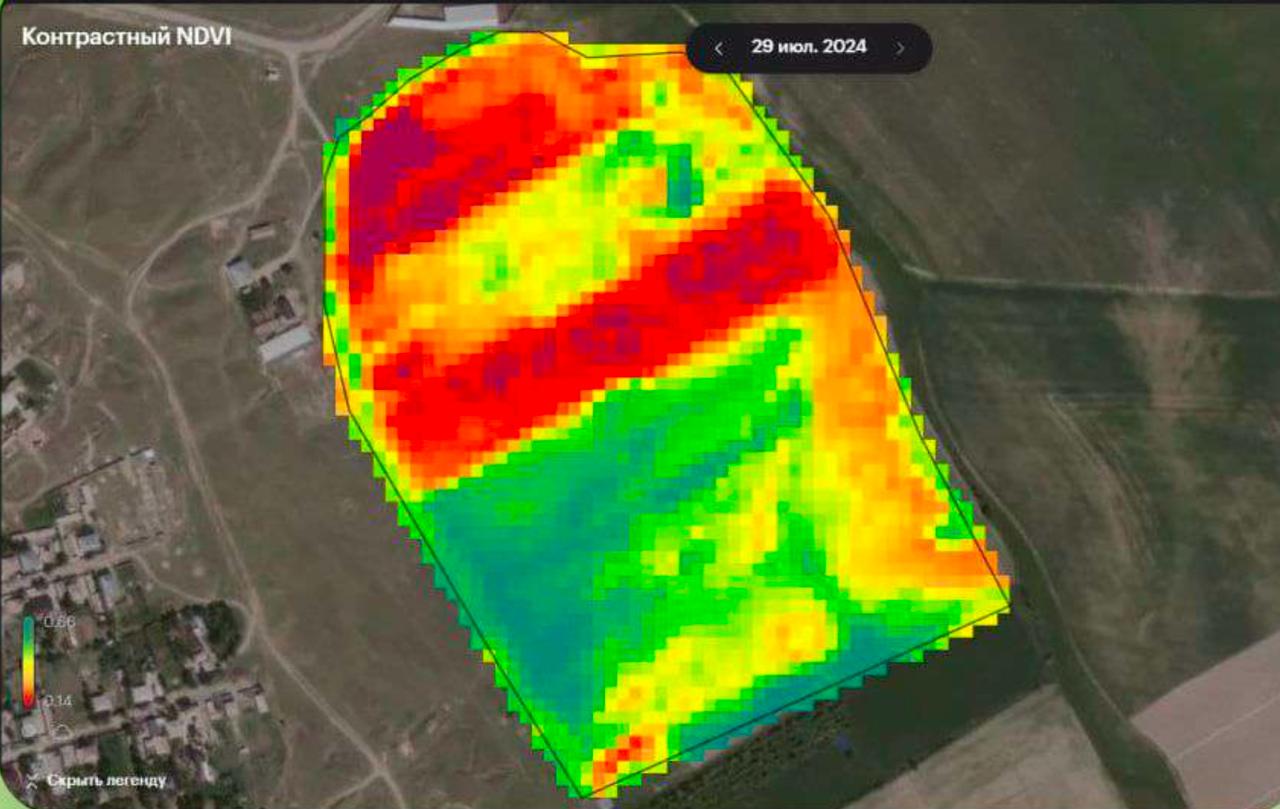

NDVI (Normalized Difference Vegetation Index) analysis showing crop health mapping using satellite imagery

Resource Optimization: Provides data to help optimize water and chemical resource usage

Wildlife Monitoring: Tracks wildlife presence to help protect crops from animal damage
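The NDVI map in the figure above follows the standard formula NDVI = (NIR − Red) / (NIR + Red), which needs a near-infrared band in addition to red. A minimal NumPy sketch follows; the health thresholds of 0.2 and 0.5 are illustrative assumptions, not values from the project.

```python
import numpy as np


def ndvi(nir, red, eps=1e-9):
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red), in the range [-1, 1]."""
    # Cast to float so integer (e.g. uint8) bands don't overflow or truncate
    nir = np.asarray(nir, dtype=np.float64)
    red = np.asarray(red, dtype=np.float64)
    return (nir - red) / (nir + red + eps)  # eps avoids division by zero


def classify_health(ndvi_map, thresholds=(0.2, 0.5)):
    """Bucket NDVI into 0 = bare/stressed, 1 = moderate, 2 = healthy (illustrative)."""
    low, high = thresholds
    return np.digitize(ndvi_map, [low, high])
```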

Field Testing and Results

I conducted extensive field tests to evaluate the system's performance in real agricultural conditions:

Detection Performance:

- Successfully detected and classified multiple pest species

- Achieved an 87% F1-score in object detection

- Provided real-time GPS coordinates for all detected objects

- Integrated seamlessly with existing farm management workflows
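The F1-score is the harmonic mean of precision and recall, F1 = 2PR / (P + R), equivalently 2TP / (2TP + FP + FN); it is not an accuracy figure. For object detection, a prediction is typically counted as a true positive when it matches a ground-truth box above an IoU threshold such as 0.5. The sketch below computes the metrics from match counts; the counts used with it are arbitrary, not Skywatch results.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1 from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```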

Technical Achievements:

- Real-time HD video processing on edge hardware (Raspberry Pi 4)

- Stable flight control with 1-2 m positioning accuracy validated across 15+ test flights

- Weather-resistant design suitable for various outdoor conditions

- Modular software architecture allowing easy feature additions
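A positioning-accuracy figure like the 1-2 m above is usually obtained by comparing logged GPS fixes against surveyed reference points. The project does not describe its validation method, so the sketch below is only one way such a check could be done, using the haversine great-circle distance; `position_errors` is a hypothetical helper.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def position_errors(logged, reference):
    """Distance from each logged (lat, lon) fix to its matching reference point."""
    return [haversine_m(la, lo, rla, rlo) for (la, lo), (rla, rlo) in zip(logged, reference)]
```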

The system demonstrated significant improvements in monitoring efficiency compared to traditional manual inspection methods.

Project Impact

Skywatch addresses several critical challenges in modern agriculture:

Cost Reduction: Automated monitoring reduces the need for manual field inspections, saving labor costs and time

Early Detection: Real-time pest and weed identification allows for faster response times, potentially preventing larger infestations

Precision Agriculture: GPS-based detection enables targeted treatment of specific areas rather than blanket application of pesticides

Data-Driven Decisions: Continuous monitoring provides farmers with detailed field data to inform management decisions

Scalability: The drone system can cover large areas more efficiently than ground-based inspection methods

Future Development

Several improvements and extensions are planned for the Skywatch platform:

- Enhanced AI Models: Training on larger datasets to improve detection accuracy for more pest and weed species

- Weather Integration: Incorporating weather data to optimize flight scheduling and predict optimal monitoring times

- Satellite Data: Combining drone imagery with satellite data for broader area coverage

- API Development: Creating interfaces for integration with existing farm management software

Recognition

This project was developed for the National School Science Fair and demonstrates practical applications of AI and robotics in agriculture. The research shows how emerging technologies can address real-world problems in farming and food production.

The work involved extensive collaboration with local farmers to understand their needs and validate the system's effectiveness in actual field conditions.

Skywatch represents an integration of hardware engineering, machine learning, and agricultural science to create a practical solution for modern farming challenges. The project demonstrates how students can develop meaningful technology that addresses real-world problems.